A recent colleague of mine was fond of pointing out that “all software is tested.” This truism is a simplification, of course, but the basic premise is this: the bugs will be exposed. The only question is “by whom?”

I submit for your consideration another truism, perhaps a corollary to the former: “all exceptions are handled.” Yes, ALL exceptions… and I’m not just talking about a top-level catch-all exception handler.

That claim needs explaining. To back it up properly, let’s talk about what a typical exception-handling mechanism really is.

According to Bjarne Stroustrup in his classic, “The C++ Programming Language,” “the purpose of the exception-handling mechanism is to provide a means for one part of a program to inform another part of a program that an ‘exceptional circumstance’ has been detected.”

Unfortunately, Stroustrup’s book was published a decade ago, and the consensus among software engineers on the subject has grown hardly any less vague since.

Put more concretely, the typical exception-handling mechanism enables a delegator to reconcile an expression of non-compliance by the tasks it has delegated.

So exception-handling mechanisms usually have several parts. To begin with, there’s a task or process. Then there’s a task supervisor: the method that contains the typical try-block, which wraps or makes calls to the task. There’s the exception object, which is really the information that describes an occurrence of non-compliance… the excuse, if you will. An instance of an exception object is not the actual exception itself, merely the expression of it. Finally, there’s the handler, which is the reconciliation plan hosted by the supervisor.
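To make that vocabulary concrete, here is a minimal Python sketch that maps each part onto code. The names (`TransferDeclined`, `transfer_funds`, `run_transfer`) are hypothetical and are only meant to illustrate the parts, not any particular system.

```python
class TransferDeclined(Exception):
    """Exception object: the 'excuse' that describes the non-compliance."""

    def __init__(self, account: str, reason: str) -> None:
        super().__init__(f"transfer from {account} declined: {reason}")
        self.account = account
        self.reason = reason


def transfer_funds(account: str, balance: int, amount: int) -> int:
    """Task: does the work, or expresses non-compliance by raising."""
    if amount > balance:
        raise TransferDeclined(account, "insufficient funds")
    return balance - amount


def run_transfer(account: str, balance: int, amount: int) -> str:
    """Supervisor: delegates to the task from inside a try-block."""
    try:
        new_balance = transfer_funds(account, balance, amount)
    except TransferDeclined as excuse:
        # Handler: the supervisor's reconciliation plan for this class of exception.
        return f"declined ({excuse.reason}); no money moved"
    return f"ok, new balance {new_balance}"


print(run_transfer("acct-1", 100, 30))   # ok, new balance 70
print(run_transfer("acct-1", 100, 500))  # declined (insufficient funds); no money moved
```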

In many languages, supervisor code can have zero or more reconciliation plans, each for a particular class of exception. A handler can also throw or re-throw, meaning a succession of supervisors could potentially be informed of an insurrection.
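A rough sketch of several reconciliation plans in one supervisor, with one of them re-raising so that a second supervisor is informed. The configuration-loading scenario and every name in it (`ConfigMissing`, `ConfigCorrupt`, `inner_supervisor`, `outer_supervisor`) are hypothetical.

```python
import logging

log = logging.getLogger(__name__)


class ConfigMissing(Exception):
    pass


class ConfigCorrupt(Exception):
    pass


def load_settings(source: dict) -> dict:
    """Task that can fail in two distinct ways."""
    if "settings" not in source:
        raise ConfigMissing("no settings section")
    if not isinstance(source["settings"], dict):
        raise ConfigCorrupt("settings section is not a mapping")
    return source["settings"]


def inner_supervisor(source: dict) -> dict:
    try:
        return load_settings(source)
    except ConfigMissing:
        # Reconciliation plan #1: a missing section has a safe default.
        return {}
    except ConfigCorrupt:
        # Reconciliation plan #2: record it, then re-raise so the next supervisor is informed.
        log.warning("corrupt settings; escalating")
        raise


def outer_supervisor(source: dict) -> str:
    try:
        settings = inner_supervisor(source)
    except ConfigCorrupt as exc:
        # The outer supervisor owns the decision to abort.
        return f"startup aborted: {exc}"
    return f"started with {len(settings)} setting(s)"


print(outer_supervisor({"settings": {"theme": "dark"}}))  # started with 1 setting(s)
print(outer_supervisor({}))                               # started with 0 setting(s)
print(outer_supervisor({"settings": 42}))                 # startup aborted: settings section is not a mapping
```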

So it can be said that there are actually zero or more reconciliation plans or handlers… within the program. How do “zero handlers” square with the bit about all exceptions being handled?

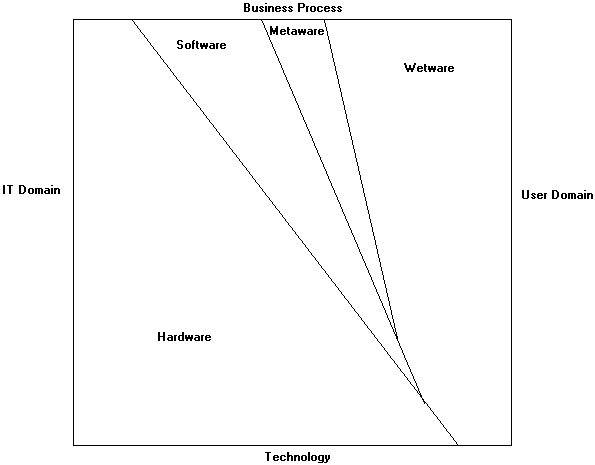

We developers easily forget that software is always a sub-process of another task. Software doesn’t run for its own entertainment. Software is social. It collaborates, and blurs the boundaries between processes. Software processes that are not subordinate to other software processes are subordinate to… (I’ll borrow a term) wetware. When software experiences a circumstance that it cannot reconcile, the software will invariably express non-compliance in some fashion to a supervisor. In many cases, the supervisor is appropriately software. In many other cases, the supervisor is wetware.

Regardless… whether the program goes up in a fireball of messages, locks hard, blinks ineffectively, or simply disappears unexpectedly, these acts of insubordination are expressed to wetware. Wetware always has the final reconciliation opportunity. A user can take whatever information he or she has about the exception and use it to handle that non-compliance.

The user could do a number of things: report it to a superior, ask someone else to investigate, or try again. Even if they choose to do nothing, they are making that “informed” decision… and there you have it. That’s what I mean when I say “all exceptions are handled.”

Now don’t worry. I’m certainly not about to advocate unprotected code.

Unfortunately, we developers aren’t trusting types. We go out of our way to protect against exceptions everywhere. We write code in every procedure that silently skips processing whenever a dependency is broken. We write exception handlers that quietly log the details or swallow exceptions completely.

There are occasions when “do nothing” is a reasonable reconciliation. Much of the time, however, we engineers are engaging in illicit cover-up tactics. Handling decisions get co-opted from the real process authorities and made at levels where the best interests of the stakeholders are not considered. Cover-ups often add redundant checks that make code harder to understand, maintain, and fix; the result is frequently more complex than proper exception handling would have been.
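A small sketch of the difference between a cover-up and letting non-compliance reach a supervisor. The function names and the "3O" typo (letter O instead of zero) are hypothetical illustrations.

```python
import logging

log = logging.getLogger(__name__)


def parse_quantity_covered_up(text: str) -> int:
    """Cover-up style: swallow the failure and quietly substitute a value."""
    try:
        return int(text)
    except ValueError:
        # DEBUG is below the default WARNING level, so nobody ever sees this.
        log.debug("bad quantity %r, using 0", text)
        return 0


def parse_quantity(text: str) -> int:
    """Honest style: the ValueError propagates to whoever delegated this task."""
    return int(text)


# A typo silently becomes a zero-item order...
print(parse_quantity_covered_up("3O"))  # 0

# ...while the honest version fails loudly, at the point of failure.
try:
    parse_quantity("3O")
except ValueError as exc:
    print(f"supervisor informed: {exc}")
```

The covered-up version never tells anyone that the order is wrong, and the mistake resurfaces later somewhere that looks unrelated.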

Where does this get us? You gotta be fair to your stakeholders.

Keeping all stakeholders in mind is critical to building an exception throwing and handling strategy, so let’s dig into that a bit. The first stakeholder almost everyone thinks of in just about any project is the project sponsor, and the business requirements most often need to support the sponsor’s needs. The end user comes next, and things like client usability come to mind. Sometimes end users fall into categories. If your program is middleware, you might have back-end and front-end systems that need to be informed of exceptions. In some cases, you have to consider not only the supervisory needs of the end user but also, potentially, those of several separate back-end system administrators, each with unique needs.

Remember, however, that during project development, you, the developer, are the first wetware supervisor and stakeholder of your program’s activities. You’re human; you’re going to introduce errors into the code. How do you want to find out about them?

Here’s a tip: the further from its original author a bug is discovered, the more expensive it is to fix.

You want mistakes to grab you and force you to fix them now. You do not want them to get past you, falsely pass integration tests, and come across to QA, or end users, as a logic flaw in seemingly unrelated code.

Again, in the typical cover-up strategy, dependency-checking code becomes clutter and adds complexity. In cases like this you may find yourself spending almost as much time debugging dependency checks as fixing functional code. Part of a well-designed exception strategy is writing clean code that keeps dependency checking to a minimum.

One way to make that possible is to set up encapsulation boundaries. This is also good practice for other reasons, including managing security. Top-level processes delegate all their activities so they can supervise them. Validate resources and lock them as close to the start of your process as possible, and throw when that fails. Validate data and its schema when and where it enters the process, and throw when that fails. Validate authentication and authorization as early as you can, and throw when that fails. Once your dependencies have been sanity-checked, clean code can get on with the processing.
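A minimal sketch of an encapsulation boundary, assuming a hypothetical ordering domain: authorization and data are checked once at the edge (`accept_order`), and the code inside the boundary (`price_order`) does no defensive re-checking. Resource acquisition and locking are left out to keep it short, and all names here are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Set


class InvalidOrder(Exception):
    """Incoming data failed validation at the boundary."""


class NotAuthorized(Exception):
    """The caller may not perform this action."""


@dataclass(frozen=True)
class Order:
    sku: str
    quantity: int


def accept_order(raw: dict, roles: Set[str]) -> Order:
    """The edge: check authorization and data once, and throw when either fails."""
    if "buyer" not in roles:
        raise NotAuthorized("user lacks the 'buyer' role")
    sku = raw.get("sku")
    qty = raw.get("quantity")
    if not isinstance(sku, str) or not sku:
        raise InvalidOrder("sku must be a non-empty string")
    if type(qty) is not int or qty <= 0:  # also rejects bools and non-integers
        raise InvalidOrder("quantity must be a positive integer")
    return Order(sku, qty)


def price_order(order: Order, prices: Dict[str, int]) -> int:
    """Inside the boundary: no defensive re-checks, just business logic."""
    return prices[order.sku] * order.quantity


def process(raw: dict, roles: Set[str], prices: Dict[str, int]) -> int:
    order = accept_order(raw, roles)   # validate at the edge...
    return price_order(order, prices)  # ...then process cleanly


print(process({"sku": "A1", "quantity": 3}, {"buyer"}, {"A1": 250}))  # 750
```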

Don’t forget that the UI is an edge, too. Input should be validated there, and because the user has important needs of their own, reconciliation code that respects the user should be in place as well.
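One way the UI edge might look, sketched under assumptions: known domain exceptions are translated into messages the user can act on, and anything unrecognized is re-raised rather than hidden, because it probably indicates a bug. The exception types, messages, and `submit_from_ui` are hypothetical.

```python
from typing import Callable, Dict, Optional, Type


class InvalidOrder(Exception):
    pass


class NotAuthorized(Exception):
    pass


# Messages written for the user, keyed by domain exception type.
USER_MESSAGES: Dict[Type[Exception], str] = {
    InvalidOrder: "Please check the order details and try again.",
    NotAuthorized: "Your account can't place orders. Please contact your administrator.",
}


def user_message_for(exc: Exception) -> Optional[str]:
    for exc_type, message in USER_MESSAGES.items():
        if isinstance(exc, exc_type):
            return message
    return None


def submit_from_ui(action: Callable[[], None]) -> str:
    """UI edge: reconcile known failures in terms the user can act on."""
    try:
        action()
    except Exception as exc:
        message = user_message_for(exc)
        if message is None:
            raise  # not a known domain failure, so probably a bug: let it surface
        return message
    return "Order placed."


def place_empty_order() -> None:
    raise InvalidOrder("quantity must be a positive integer")


print(submit_from_ui(place_empty_order))  # Please check the order details and try again.
```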

Thrown exceptions, of course, need to be of well-known domain exception types that can be easily identified in the problem domain and handled well. Don’t go throwing framework exceptions; it will be too easy to confuse a framework exception with an application exception. Some frameworks offer an “ApplicationException” designed for the purpose, and I might consider inheriting from it, but throwing a bare “ApplicationException” is probably not unique or descriptive enough within the problem domain to make the code understandable.
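Python has no built-in `ApplicationException`, so a rough equivalent is a single application-specific base class with descriptive subclasses beneath it. The ordering domain and the names `OrderingError`, `OutOfStock`, `PaymentDeclined`, and `reserve` are assumptions made for illustration.

```python
from typing import Dict


class OrderingError(Exception):
    """Root of this application's domain exceptions."""


class OutOfStock(OrderingError):
    def __init__(self, sku: str, requested: int, available: int) -> None:
        super().__init__(f"{sku}: requested {requested}, only {available} available")
        self.sku = sku
        self.requested = requested
        self.available = available


class PaymentDeclined(OrderingError):
    def __init__(self, reason: str) -> None:
        super().__init__(f"payment declined: {reason}")
        self.reason = reason


def reserve(sku: str, requested: int, stock: Dict[str, int]) -> None:
    available = stock.get(sku, 0)
    if requested > available:
        raise OutOfStock(sku, requested, available)
    stock[sku] = available - requested


stock = {"A1": 2}
try:
    reserve("A1", 5, stock)
except OrderingError as exc:  # catches only our own domain failures, never a built-in KeyError
    print(exc)  # A1: requested 5, only 2 available
```

Catching the base class gives supervisors one clear line between "the domain said no" and everything else, while the subclasses keep each failure descriptive.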

By taking an encapsulation boundary approach, lower level tasks cleanly assume their dependencies are valid. If a lower level task fails due to a failed assumption, you’ll know instantly that it’s a bug. You’ll know the bug is either right there on the failure point, or it’s on the edge where the dependency or resource was acquired.
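A sketch of how a supervisor might tell the two kinds of failure apart, assuming the same kind of hypothetical domain base class: domain exceptions are reconciled, while anything else from inside the boundary is treated as a failed assumption and escalated loudly. `unit_price` and `supervise` are illustrative names, and returning `None` is just one possible reconciliation.

```python
import logging
from typing import Any, Callable, Dict

log = logging.getLogger(__name__)


class OrderingError(Exception):
    """Base class for expected, domain-level failures."""


class OutOfStock(OrderingError):
    pass


def unit_price(sku: str, prices: Dict[str, int]) -> int:
    # No defensive check: the edge is assumed to have admitted only known SKUs.
    return prices[sku]


def supervise(task: Callable[..., Any], *args: Any) -> Any:
    try:
        return task(*args)
    except OrderingError as exc:
        # Expected non-compliance: reconcile it (here, record it and return None).
        log.info("reconciled domain failure: %s", exc)
        return None
    except Exception as exc:
        # A failed assumption inside the boundary is a bug, either here or at the
        # edge that admitted the data. Make it loud instead of quietly recovering.
        raise RuntimeError(f"bug: failed assumption in {task.__name__}") from exc


print(supervise(unit_price, "A1", {"A1": 250}))  # 250
```

Calling `supervise(unit_price, "ZZ", {"A1": 250})` would raise a `RuntimeError` chained to the original `KeyError`, pointing straight at either `unit_price` or the edge that let "ZZ" through.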

Another important consideration in an exception-handling strategy is code reusability. It often makes sense to decouple a supervisor from its process, so that consistent supervision can be applied over many different processes. This also opens up the possibility of design-time or even run-time configurable supervision: each different “supervisor” construct could respond to the informational needs of a different set of stakeholders. Finally, handling can be decoupled from supervision, which provides another way to make stakeholder needs configurable.
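A minimal sketch of supervision decoupled from the process and chosen by configuration. `Supervisor`, `make_supervisor`, and the "development"/"production" profile names are hypothetical. In the development profile there are no handlers, so every failure reaches the developer. In production, domain failures are reconciled for the end user and recorded for administrators.

```python
from typing import Any, Callable, Dict, List, Optional, Type

Handler = Callable[[Exception], Any]


class OrderingError(Exception):
    pass


class OutOfStock(OrderingError):
    pass


class Supervisor:
    """Supervision decoupled from any particular process; handlers are plugged in."""

    def __init__(self, handlers: Dict[Type[Exception], Handler]) -> None:
        self.handlers = handlers

    def run(self, task: Callable[[], Any]) -> Any:
        try:
            return task()
        except Exception as exc:
            handler = self._find_handler(exc)
            if handler is None:
                raise  # no plan at this level: escalate to the next supervisor, or to wetware
            return handler(exc)

    def _find_handler(self, exc: Exception) -> Optional[Handler]:
        # Walk the exception's class hierarchy so the most specific handler wins.
        for exc_type in type(exc).__mro__:
            if exc_type in self.handlers:
                return self.handlers[exc_type]
        return None


def make_supervisor(profile: str, admin_log: List[str]) -> Supervisor:
    """Choose a supervision policy from configuration."""
    if profile == "development":
        # No handlers: every failure grabs the developer immediately.
        return Supervisor({})

    def tell_user(exc: Exception) -> str:
        admin_log.append(f"{type(exc).__name__}: {exc}")  # for back-end administrators
        return "Sorry, we couldn't complete your order."  # for the end user

    return Supervisor({OrderingError: tell_user})


def sold_out() -> None:
    raise OutOfStock("A1 is sold out")


admin_log: List[str] = []
print(make_supervisor("production", admin_log).run(sold_out))  # Sorry, we couldn't complete your order.
print(admin_log)  # ['OutOfStock: A1 is sold out']
```

The process (`sold_out`) knows nothing about who is watching; swapping the profile changes which stakeholders hear about a failure and how.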

Ok, so I’m getting carried away a bit there… but you get what I’m saying.

By designing encapsulation boundaries, and by decoupling the various parts of exception handling, you can support effective supervision and reconciliation through each phase of the project life cycle via configuration. In this way, you can honor all the stakeholders during their tenure with the application, and write better software.

[3/12/08 Edit: I originally liked the term “trust boundaries”, because it focused on being able to trust dependencies, and also brought security as a dependency to mind, but “encapsulation boundary” is much more precise. Thanks, Kris!]

I was also a bit concerned that the security devices attached to the cell phones they had around the table were some sort of transponder to hide “vapor-ware” special effects. My own phone (an HTC Mogul by Sprint) was ignored when I placed it on the table.

The preview is over at AT&T today. According to Wikipedia, Microsoft expects they can get these down to consumer price ranges by 2010 (two years!).